.

KUEHNE & NAGEL SHIPPING TRACKING SYSTEM

USER MANUAL

SYSTEM OUTLINEThis system is intended to simplify and improve on the existing excel based system currently in place making it quicker and easier to perform the basic functions required. The existing system can be broken down into the following key steps:

- A list of OPEN PURCHASE ORDERS, in the form of an excel spreadsheet, is extracted from Syspro using an existing visual basics program based on a user entered date range. This list includes local suppliers so these suppliers are manually deleted from the list. This list is then emailed to K & N.

- Certain suppliers in the list generated in step 1 are to be sent to LOCTON. Those suppliers that do not need to be sent to LOCTON are deleted from the list and the list is then emailed to LOCTON.

- Open orders generated in step 1 and sent to K & N are taken as being in a status of 0. To avoid confusion only the first weeks open orders in the list generated in step 1 are placed in a second excel spreadsheet called the K & N status report and emailed to K & N. K & N will update this data with details on the status of each purchase order line and send the list back to ZF. This process is repeated until a purchase order line is complete and it is placed in a status of 99.

- A third excel spreadsheet called the container requests list is updated daily with details on all containers that are expected to arrive. The items in these containers are placed IN TRANSIT in Syspro and a GRN is created.

- When items physically arrive a warehouse transfer is performed in Syspro and the items are placed in the AC warehouse.

This entire process will be replaced by the new XML based system and this guide provides detailed instructions on how to perform the steps above using the new system.

1. EXPORTING THE XML FILES

The logic in the existing visual basics program, used to generate the initial list of open orders, was used as a base for the XML export function in the new system. This function can be accessed from the Export XML File button on the Import XML file tab as shown below:

The following screen will be displayed:

The user input required for this function is exactly the same as was required for the existing visual basics program. The user must supply the start date or week and the number of weeks after the start date, giving the end date, to be used as the range of LATEST DUE DATES when extracting open orders from Syspro.

The RETRIEVE ORDERS function extracts the K & N open orders list, the Locton open orders list and the K & N status report and displays these lists to the user. The K & N open orders list is exactly the same list that was generated by the existing visual basics program except that it excludes all suppliers that have a currency of ‘R’ thereby excluding local suppliers and it excludes Locton suppliers. The Locton open order list is a subset of the K & N open orders list generated by the existing visual basics program.

The system has a function for maintaining the list of Locton suppliers (Please see the Query Database functions for details on the Locton supplier list maintenance function). The K & N status report is a combination of the first weeks K & N open orders, the start date entered plus 6 days, and the existing orders in the new systems SQL database that are in a certain status. A status list table is contained in the new systems SQL database that defines the status values to include when generating the K & N status report (At present all lines that have a status of 10 or less will be included). This status list does not have a maintenance function but a system administrator could easily maintain this list if required.

If the lists displayed to the user are correct the user can export them to XML files by clicking the Export XML Files button. The exported xml files can be found in the C:\KUEHNE & NAGEL SHIPPING TRACKING SYSTEM\XML\EXPORT folder on the users PC. The file names are based on the current date and time so that a history of exported files can be kept as shown below:

· 2007-01-30_03-39 PM_KN_OPEN_ORDERS.XML

· 2007-01-30_03-39 PM_KN_STATUS_REPORT.XML

· 2007-01-30_03-39 PM_LOCTON_OPEN_ORDERS.XML

These files can then be emailed to the required people.

2. IMPORTING THE K & N STATUS REPORT

The 2007-01-30_03-39 PM_KN_STATUS_REPORT.XML file generated with the export XML files function would have been emailed to K & N and they would have updated the information in it as required and then sent it back to ZF. In the existing system this would have been done using the K & N status report. The K & N Status Report and the Container Requests List in the form of excel spreadsheets are used by the existing system to track and maintain the status of containers and items but these lists must be manually maintained in the existing system.

The new system stores this information in a more efficient and reliable SQL database and uses the standard XML file format to import and export the data into and out of the SQL database. The new system also provides advanced functions to query, sort, filter and maintain the data in the SQL database replacing the need for the K & N Status Report and the Container Requests List.

Importing the K & N status report XML file into the SQL database is performed using the function provided on the Import XML File tab as shown below:

Because the K & N status report XML file can contain a fairly large amount of records it may be difficult to validate and correct errors in the file and then import the file in a single sitting. In most cases the user will have to perform other tasks or leave the office during the validation and import process. To avoid starting from scratch each time the system automatically stores the XML files that are imported in the C:\KUEHNE & NAGEL SHIPPING TRACKING SYSTEM\XML folder on the users PC so the user can either import a new K & N status report XML file or continue working on a previous one.

The first step is to import a new K & N status report XML file. This is done by clicking the Select File to Import button as shown above. The standard windows file open screen will be displayed and the user must browse to the location where the file attached to the returned K & N email was saved.

If the user selects to open the file its contents will then be validated for basic data format errors. The XML file generated by the export function contains a set of rules called a schema defining the required structure of the data in the file. If K & N use the XML file and its schema in the correct manner as demonstrated by the XMLEditor.exe program provided by Cranswick Computing no basic data format errors should ever occur. In the unlikely event that such a data format error does occur the following type of error message will be displayed:

In the above example a date field in the file contained the string “WRONG_DATE_FORMAT” and not a valid date.

In most cases the file will contain no data format errors and its contents will just be displayed to the user as shown below:

At this point a copy of the selected XML file has been created in the C:\KUEHNE & NAGEL SHIPPING TRACKING SYSTEM\XML folder on the users PC and this copy should always be used from this point onwards and not the original file saved from the returned K & N email. The file is named based on the current date and time when it was first imported as shown below

· 2007-01-31_01-53 PM.XML

If the user closes the program at this point and re-opens it the file can be loaded by clicking on the Load Previous Import button. The following screen will then be displayed:

Clicking on the dropdown list displays all previous import files. In most cases there should only be one file per date making it easy to load the latest file.

At this point the user is ready to attempt to import the data into the SQL database for the first time. To do this the Import Data button must be clicked.

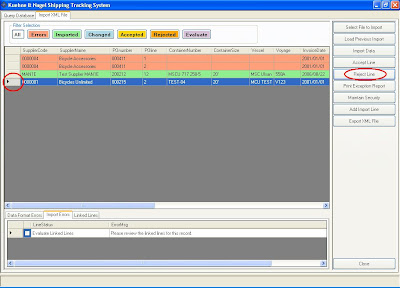

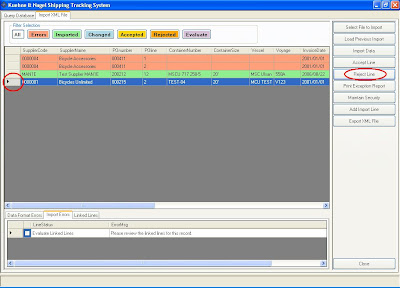

The program will attempt to validate all lines to import. If any error occurs while importing a line it will be displayed in red. If the line passes all validations it will be imported into the SQL database and will be displayed as a successfully imported line in green. Clicking on the left grey area of a line will cause information on why it failed the validations, if any, to be displayed at the bottom of the screen as shown below:

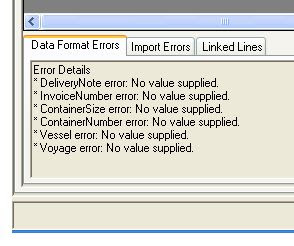

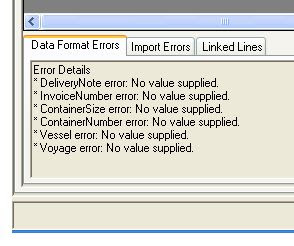

First data format validations will be performed for example if a line has no container number it can not be imported. The bottom of the screen contains 3 tabs: Data format errors, Import errors and linked lines as shown below:

If the line passes all data format validations the import validations will then be performed.

The validation criteria are as follows:

- If no matching line exists (based on purchase order number, line and container) but there are lines that have the same purchase order number and line number then those lines will be displayed to the user for checking in the linked lines tab. The line will be displayed in purple indicating that it needs to be evaluated. The user can then decide to accept or reject the new line. This process is discussed in more detail further on in this section.

- If the line does exist but the vessel, voyage or ETS date has changed the new value will be assumed to be correct and the program will automatically import the line and overwrite the existing values.

- If the line does exist but values other than the vessel, voyage or ETS date have changed the new values will be displayed to the user for checking. The line will be displayed in purple indicating that it needs to be evaluated. The user can decide to discard or accept the new values. This process is discussed in more detail further on in this section.

- The suppler code must be a valid Syspro supplier

- The Purchase order must exists and be in a valid status

- The purchase order line must exists

- The part must be a valid Syspro stock code

- The part number for the line must be correct

- The Invoice Line value will be validated. First the price of the part is extracted as the newest valid contract price for the supplier and part number set up in Syspro’s contract pricing. The lines invoice date must be between the valid from and valid to dates set up for the contract price. The price extracted multiplied by the order quantity for the line must match the invoice line value. Up to 5 decimal places.

- The status will be validated against a status table in the SQL database.

If the line fails one or more of the import validations the errors will be listed in the import errors tab as shown below:

LINKED LINES (Imported from XML file)

If the line has linked lines as discussed in one of the validations above the line will be displayed in purple indicating it requires evaluation. A message will be displayed in the import errors tab indicating this as shown below:

The existing lines in the database that have the same purchase order number and line number will be displayed under the linked lines tab as shown below:

At this stage the user must accept or reject the line. To reject the line the user must first select the line by clicking the grey area to the left of the line and then click the Reject Line button as shown below:

The line will then change to an orange colour indicating it has been rejected.

Any rejected line can be restored by first select the line by clicking the grey area to the left of the line and then click the Restore Line button. The restore line button will only be visible if a rejected line is currently selected.

To accept the line the user must first check the checkbox in the import errors tab, then select the line by clicking the grey area to the left of the line and then click the Accept Line button. The line will then change to a yellow colour indicating it has been accepted.

If the import data function is now run, all accepted lines will be imported into the database and will change to green.

CHANGED LINES (Change by K & N and imported from XML file)

If line values other than the vessel, voyage or ETS date have changed the line will be displayed as purple and new values will be displayed to the user for checking in the import errors tab as shown below:

The user can either, reject all changes by first selecting the line by clicking the grey area to the left of the line and then click the Reject Line button, or accept one or more of the suggested changes. To do this the user must first check the checkboxes of the changes desired in the import errors tab, then select the line by clicking the grey area to the left of the line and then click the Accept Line button. The line will then change to a yellow colour indicating it has been accepted. Also any changes that where not checked will be set back to the original values.

If the import data function is now run, all accepted line changes will be imported into the database and the line will change to green.

CHANGING LINE VALUES MANUALLY

Any value on a line can be changed manually in the Import XML File screen by simply double clicking inside the field to change and typing in the new value as shown below:

Once a value in the line has been changed it will change to a blue colour as shown below:

If the import data function is now run, all changed lines will be validated again to allow the user to correct minor errors immediately.

NB It must be noted that if the user makes a change manually that would normally result in the changes being evaluated, such as changing the container number which would usually cause the linked lines to be displayed, the system will take it for granted that the user desires these changes and will therefore not ask the user to evaluate them but will just import the line.

FILTER BUTTONS

The colour coded buttons at the top of the import XML file screen are used to display only the lines of interest such as error lines. Simply click the desired filter button and the lines displayed on screen will be reduced.

ERROR REPORT

If at any time, after the import data function has been run, and if one or more lines have errors, an exception report can be printed by clicking on the Print Exception Report button. An example of the exception report is shown below:

The report lists all fields for the line and then the import errors for the line. Data format errors, such as blank container numbers, are not printed in this report as these errors are considered obvious and do not require any special attention. If the invoice line value does not match the order quantity multiplied by the parts contract price however, the error may require special attention.

Lines that require evaluation are not considered to be error lines although they make use of the Import Errors tab at the bottom of the screen. As such they are not included in the error report.

SECURITY MAINTENANCE

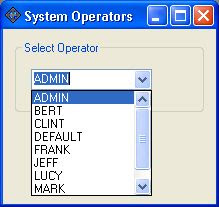

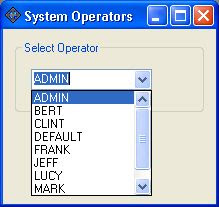

The system has a security facility to enable an administrator to disable certain functionality to certain users if required. This facility is also used to store parameters used by the system that may change from time to time such as the network path to the database server and web services. The security facility can be accessed by clicking on the Maintain Security button. The following screen will be displayed:

The program is designed to be launched from within the users My Menu in Syspro. It can also be launched from a shortcut or MSDOS BATCH script saved in a text file ending in a .BAT extension e.g. TEST.BAT. The users Syspro operator code is passed into the program and is used to record operator activity such as changing data in the database. The Syspro operator code is also used to control access to certain functionality in the system. It may be desirable to limit certain operators to query access only and not allow them to change data in the database.

The program is set to look for a UserSettings folder that can either be on the clients PC or on the server. This folder will contain an XML file for each operator that will use the program. The folder contains a DEFAULT.XML file. If a new operator must be added the operator code is simply typed in the dropdown lists text area (ADMIN above left image) and the Ok button pressed. This will create a new security file for the new operator using the default setting contained in the DEFAULT.XML file. The DEFAULT.XML file can be maintained like any other operator file.

To maintain an operator file select the operator code from the dropdown list and press the Ok button. The following screen will be displayed:

The progrmID must always be ZFKN_ImportQuery. The setting names must never be changed by anyone other than a Cranswick Computing developer as this may render the program inactive. The setting value is used by the system administrator to control security. If the user must be denied Change Access the SettingValue for the HasChangeAccess parameter must be set to false.

In most cases these parameters must not be changed. It is advised that before changing these setting a developer at Cranswick Computing is consulted with.

MANUALLY ADDING IMPORT LINES

In some cases, such as for BMW suppliers, it may be required to add an import line manually that would not be in the K & N Status Report XML file. To add a line manually click the Add Import Line button. The following screen will be displayed:

The information required must be entered in the fields provided and when the Save button is pressed the line will be added. After the line has been added the Import Data function must be run to validate and import the added line. The Line Type can be K & N Sea Freight, K & N Air Freight or BMW Freight.

Only line type of K & N Sea Freight will be considered when exporting the K & N Status Report. All other line types are simply stored in the system and can be queried using the sort, filter and query functions. These lines can also be receipted into Syspro using the system functions.

2. QUERING THE DATABASE

Once the data is in the systems database it can be changed, queried, deleted or receipted into Syspro. All this functionality is provided on the Query Database screen.

To make the system more efficient a default (can be changed in the security settings) of 2 months of data is retrieved. This date range is based on ETS Date. To retrieve the data from the database click the Refresh Data button. This will fetch 2 months of data and display it as shown below:

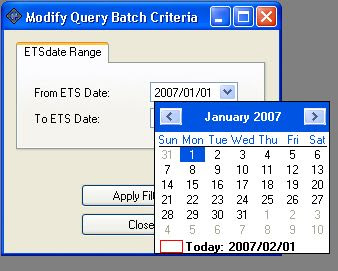

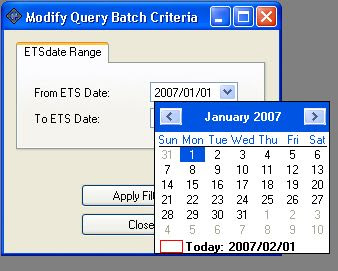

If the user needs to see older records the Modify Query Batch Criteria function can be used to change the range of dates fetched from the database. Clicking on the Modify Query Batch Criteria will display the following screen:

Any range of dates can be selected however as the database grows in size larger query sets will take longer to fetch from the database making the program less efficient. The user must make use of his/her discretion when selecting date ranges.

FILTERING THE DATA

Once a batch of data has been fetched from the database it can be filtered based on a number of filter criteria. Clicking on the Modify Filter Criteria button will display the following screen:

The filtering process searches for substrings in the selected field. For example, if the user wishes to see all suppliers that deal with fasteners the text Fastener can be typed in the supplier name field and the Apply Filters button pressed. Only lines that have the string Fastener within the supplier name will then be displayed, such as Fasteners are us, ABC Fasteners etc.

An exception to this is the date fields and the Status field. For date fields a single date is selected and all lines with matching dates will be displayed. The status field dropdown list allows the user to filter by a single status at a time.

Filters can be concatenated. For example if the user wants to see all lines for a single container CONTAINER_1 but only those for part ABC, then CONTAINER_1 would be entered in the Container Number field and ABC would be entered in the Part Number field.

It must be noted that the filters only apply to the batch of data that was extracted from the database when the Refresh Data or Modify Query Batch Criteria functions where executed. Filtering by a particular container number, for example, does not guarantee that all lines for that container number will be displayed. If one line for the container number has an ETS Date within the selected range at the time of the batch query but the other has an ETS Date outside the selected range at the time of the batch query, then only one line for the container number will be displayed even if the container number filter is used.

PURGING OLD DATA

At some stage data will become too old to be of any interest. In this case the data can be purged using the Purge Old Data function. Clicking the Purge Old Data button will display the following screen:

All record with an ETS data older than the selected data will be deleted.

This function can NOT be undone so care must be taken when using it.

CHANGING DATA MANUALLY

Changing data in the system will be a constant requirement, for example changing the status of a line, and this is done using the Change Selected Line function. First a line must be selected by clicking the grey area to the left of the line and then clicking on the Change Selected Line button. The following screen will be displayed:

Because each line is uniquely identified by the purchase order number - line number - container number combination, none of these fields can be changed. The value of all other fields can be manually changed. The same validations performed during the IMPORT DATA procedure will be applied here except for the EVALUATION of changes to the line as it is assumed that the user changed the line on purpose.

If an error is found a message will be displayed indicating this as shown below:

To make it easier to find a supplier or part number BROWSE functions are included indicated by the looking glass buttons on the right of the part number and supplier code fields. Clicking one of these buttons will display a screen like this:

The list will contain all suppliers or part number in Syspro. The filters can be used to make finding a supplier or part number easier.

The filtering process searches for substrings in the selected field. For example, if the user wishes to see all suppliers that deal with fasteners the text Fastener can be typed in the supplier name field and then the TAB key pressed. Only lines that have the string Fastener within the supplier name will then be displayed, such as Fasteners are us, ABC Fasteners etc.

DELETING LINES

In some cases a line can be found to be invalid or incorrect and it must be deleted. To do this a line must be selected by clicking the grey area to the left of the line and then clicking on the Delete Selected Line button. A confirmation message will be displayed and if the user accepts, the line will be permanently deleted.

This function can NOT be undone so care must be taken when using it.

RECEIPTING LINES INTO SYSPRO

At some stage during the process the items must be receipted into inspection and a GRN created. This can be done at a line level or at a container level.

To receipt a single line select the line by clicking the grey area to the left of the line and then and then click the Receipt Selected Line button. The system will then perform a purchase order receipt in Syspro. The fields used are as follows:

- Purchase Order Number – Purchase Order Number

- Purchase Order Line – Purchase Order Line

- Order Quantity – Order Quantity

- Container Number – Notation

- Bin – Bin

- Delivery Note – Lot Number

If an error occurs during the receipt process an error message will be displayed:

Once a line had been successfully receipted it will change to green. Green lines can not be receipted again.

To receipt a container the filters must first be used to ensure that only a single containers lines are displayed. If more than one container lines are displayed an error message will indicate this. Only the container number filter must be active at the time of receipting a container to avoid missing lines. If other filters are active at the time of receipting a container an error message will indicate this.

It must be noted that the filters only apply to the batch of data that was extracted from the database when the Refresh Data or Modify Query Batch Criteria functions where executed. Filtering by a particular container number, for example, does not guarantee that all lines for that container number will be displayed. If one line for the container number has an ETS Date within the selected range at the time of the batch query but the other has an ETS Date outside the selected range at the time of the batch query, then only one line for the container number will be displayed even if the container number filter is used.

Once all the containers lines are displayed correctly the container can be receipted by clicking the Receipt Container button. The program will attempt to receipt each line for the container separately. If the line has already been receipted it will just be ignored. If an error occurs while receipting the line the program will try to receipt the next line until all the lines have been receipted. At the end of the process any errors that occurred will be displayed in a message box to the user. Once the error has been corrected, the can try to receipt the container again or just the line/lines that failed.

COMMENTS

Comments can be maintained against each line in the database. This can be useful for providing extra information about the status of a particular line. To maintain comments for a line select the line by clicking in the grey area to the left of the line and then click the Comments button. The following screen will be displayed:

Edit the comments and click the Ok button to save your changes.

STATUS TABLE MAINTENANCE

The status values used in the system are stored in a status table. These values can be maintained using the Maintain Status Table function. Clicking on the Maintain Status Table button will display the following screen:

This function works like an excel spreadsheet. To add a new line simply type in the fields next to the * on the bottom left of the screen. To change a value click inside the field and change the cells value. To delete a line, select the line by clicking on the grey area to the left of the line and hit the DELETE key on your keyboard.

BIN TABLE MAINTENANCE

The bin values used in the system are automatically generated each time a new container number is added or imported. The last used bin number is incremented by 1 giving the next bin number to use. Because this process is automated these bin values should never need to be maintained but if for some reason the user must maintain a bin number they are stored in a bin table. These values can be maintained using the Maintain Bin Table function. Clicking on the Maintain Bin Table button will display the following screen:

This function works like an excel spreadsheet. To add a new line simply type in the fields next to the * on the bottom left of the screen. To change a value click inside the field and change the cells value. To delete a line, select the line by clicking on the grey area to the left of the line and hit the DELETE key on your keyboard.

LOCTON SUPPLIER TABLE MAINTENANCE

The list of Locton supplier values used in the system is stored in a table. These values can be maintained using the Maintain Locton Suppliers function. Clicking on the Maintain Locton Suppliers button will display the following screen:

This function works like an excel spreadsheet. To add a new line simply type in the fields next to the * on the bottom left of the screen. To change a value click inside the field and change the cells value. To delete a line, select the line by clicking on the grey area to the left of the line and hit the DELETE key on your keyboard.