Quick links

Project Summary

Project Overview

My Roles

Feasibility Study

Detailed Requirements Analysis

System Design

First Release Prototype

Design Problems

Phase 2

Documentation

APPENDIX A - Feasibility Document

APPENDIX B - Cranswick Feasibility Document

APPENDIX C - Program design & implementation examples

Export XML Sequence Diagram

APPENDIX D - The Domain Model

APPENDIX E - Simple Browse Utility

Project Summary

Name: Shipment Tracking System

Dates: 2006/09/01 until 2007/02/01

Employer: SELF EMPLOYED – Working for Cranswick on a contract basis

Client: Cranswick Computing / ZF Lemforder S.A

Cranswick Computing – Web site: http://www.cranswick.co.za/

Email Adrian the project leader: adrian@cranswick.co.za

ZF Lemforder S.A - http://w

ww.zf.com/f/content/en/import/2/startseite/standorte_1/afrika/Rosslyn.html

Syspro ERP – http://www.syspro.com/

Project Overview

This is my main project report. I have tried to keep it short and to the point in the main body of

the report and have included all extra details in APPENDIX sections at the end of this document.

ZF Lemforder South Africa (Roslyn branch) manufactures front & rear axles for various

passenger and commercial vehicles including certain BMW models. The existing system that

was in place at ZF and was to be replaced by this system was intended to track the status of

parts being shipped from suppliers in Germany to South Africa and then transported to the

Roslyn warehouse.

ZF runs Syspro ERP and purchase orders would be created in Syspro for parts required from various suppliers used to assemble the final manufactured items. Kuehne + Nagel, a global logistics company http://www.kuehne-nagel.com/ , was responsible for transporting the parts from the supplier in Germany to ZF.

Certain parts require special packaging before transport and in these cases the items would need to go via LOCTON (packaging company) before being placed in a container. Because a container size is fixed certain purchase order line quantities may have to be split across many containers or a single purchase order may be split and have detail line items in many containers. The supplier invoices would also not always tie up exactly to the ZF purchase orders in some cases.

In order to ensure that the manufacturing lines at ZF function efficiently they need to know what parts will be arriving at the ZF warehouse each day and how many.

This system was intended to replace an existing system that had a VB6 program used to export data from the Syspro ERP SQL Database. Various Microsoft Excel spreadsheets were used to query and maintain this data. The existing system relied heavily on manual processes and Excel and had very little data validation procedures. One of the spreadsheets was emailed to a person at Kuehne + Nagel and manually updated by this person and then emailed back to ZF for further processing.

ZF contacted Adrian at Cranswick Computing and asked him to present them with a proposal for a new improved system aimed at addressing these core problems:

- There are too many data sources (SQL, Excel) and user interfaces (VB6 program, Excel, Syspro) in various locations making the existing system difficult to use, support and maintain.

- There are not enough validation procedures and too much room for human error.

- Since the data is spread across many Excel spreadsheets and the Syspro SQL database,

querying the data is difficult. - The required Syspro functions such as a purchase order receipt need to be manually run

from within Syspro. - Kuehne + Nagel has an XML based EDI interface that ZF could make use of to automate

certain data exchange procedures currently performed using Excel spreadsheets and

email.

Adrian then contacted me and asked me if I would like to take on this project and I accepted.

My Roles

I took on the roles of systems analyst, programmer, technical architect and project manager and Adrian took on the roles of business analyst and project leader. The project was small enough that I did not have to make use of any of Cranswick’s in house programmers.

Feasibility Study

Adrian and I went to ZF for an initial meeting with the primary user of the existing system, ZF’s IT Manager and a ZF systems administrator to establish the base requirements for the proposed system.

With these in hand I had to produce estimates of cost and delivery timescales and suggested solutions to meet the basic requirements.

I compiled a document and sent it to Adrian for review. Please see APPENDIX A for a copy of the feasibility document.

Adrian then compiled another document containing any amendments and Cranswick’s markup on the proposed systems cost and emailed it to ZF for review. Please see APPENDIX B for an extract from this document.

Detailed Requirements Analysis

The development methodology that I used while working at Cranswick and during this project was similar to other known agile methods such as XP (Extreme Programming). Agile methods are a good fit with the type of projects Cranswick typically takes on. Most projects were fairly small to start with and in time new requirements were identified and incorporated into the projects. Ultimately most projects would evolve into fairly large complex systems.

To manage the evolution of systems better I have found that phasing projects can offer some advantages. It makes it easier for the customers to absorb the costs of software development.

Requirements can be classified in order of importance and projects can be phased to address crucial requirements first. The cost of the first phase of development will be lower and it is then more likely that the customer will be willing to go ahead with the project because of the reduced risk. If the benefits of the initial phase of development are actually realized by the customer they can decide to move on to the next phase. Alternatively the project can be terminated with less concern about the loss of the initial investment.

It also becomes more difficult for large, complex and expensive systems to pass user acceptance testing and I have found that identifying core requirements and addressing those in the first release while leaving less important requirements for implementation in a later release can be beneficial for all parties concerned.

In this case the project was split into 2 parts:

- The core functionality used internally at ZF.

- The automation of data transfer to and from Kuehne + Nagel using their EDI

interface.

I visited ZF many times and held several fact finding interviews with the various stakeholders such as the primary users, managers and administrators.

One thing I have noticed is that initially people seem to be too busy to set aside time for the fact finding interviews and usually want to get them over and done with as quickly as possible. After a while they begin to realize the time and effort they could potentially save as a result of the system being developed they usually start to want to contribute more and this is when most of the detailed requirements are identified. This is another reason why agile methods work well in these types of projects because users will usually want to contribute more after having seen the first release prototype.

In this case the main user of the existing system was a lady who was quite difficult to deal with at the start of the project. It was a huge inconvenience for her to sit in on meetings and fact finding interviews and she seemed to think it was all a waste of time. She did finally come round though but it did make the requirements analysis process more difficult than usual and as a result I did miss certain requirements to start with.

I try to identify as many of the requirements as I can in the timeframe allocated for

requirements analysis but in most cases this will not be ALL possible requirements. This goes against structured systems analysis and design methods or SSADM. I do always try to identify the main requirements driving the system first and then move on to the secondary requirements.

It is difficult to justify the cost of requirements analysis to users and the customer early on in the project and they start to get edgy until a prototype is produced and they can see the results of their efforts and those of the development team as well as the full potential of the software under development.

The requirements analysis phase was repeated several times during the development iterations of this project.

Once the detailed requirements were known I compiled a project plan and identified tasks to be completed. I also prioritized certain tasks and identified dependant tasks and their estimated development times based on the feasibility document. I then provided Adrian with the information for review and we agreed on an estimated first prototype release date and what functionality it would include.

System Design

The first step was to produce a rough use case diagram. I don’t usually use CASE tools to do this, especially for smaller projects, as I see modeling more as a design tool than as a way to record the requirements. Most models produced while working at Cranswick were sketched on paper or a white board and discarded after use. In some cases if the concepts were crucial we would make use of CASE tools or a white board that can print what is on it.

For larger more complex projects with bigger budgets and more resources such as time, developers and analysts I would do things more by the book. I would then use CASE tools to record the requirements using models, a requirements catalogue and a data dictionary etc.

I think that models have 2 main uses:

- As a design tool used to help understand complex systems through abstraction and increasing levels of detail.

- To transfer understanding of the requirements from one person to another.

When there are many analysts and programmers involved in the development process and where the programmers do not interact directly with the users or the customer they must then rely on the ability of the analysts to gain a full understanding of the requirements. The analysts must then transfer this understanding on to the programmers and for this purpose models are very useful.

In my case I was usually present at meetings, prototype demos and fact finding interviews or at the very least was in direct contact with another member of my development team that was present. As a result I think there was less need for the use of models to record the requirements.

The methods taught in books and training courses such as the MCSD (modeling in the MSCD course helps to produces a good database design but does not help with the actual system design) usually follow a sequence of steps. Each step would produce a deliverable that would be used as input into the next step as is the case with OO design. So the steps could be: first produce use cases and the use case diagram, then a system sequence diagram, then produce a domain model, then interaction diagrams, then the class diagram and finally some state transition diagrams and so on.

Following a fixed process worked well for me when I didn’t have the experience to make decisions on my own and needed more guidance. Now I try and understand what these steps are trying to achieve rather than just blindly following them.

A good example of this is the comparison of the business requirements modeling course using Visio in the MCSD track and the use of entity relationship modeling and normalization. Both methods can arrive at a database in fifth normal form but Visio hides a good deal of the decision making process from the user. The user just needs to know what to do next and not so much why they are doing it.

Although this can be an advantage, especially for junior developers, I think it is better to understand why a method works well. This way it can be adapted to better suite your needs and it also helps in choosing between similar methods. Certain methods are not always suitable for the task at hand and in some cases the benefits gained from their use may not outweigh the cost of using them in terms of time and effort.

I think that ER modeling and normalization can be a much faster way to generate a reasonable database design than using Visio and Object Role Modeling. The process of normalization is quite complex and can take a good deal of time itself especially if you want a database in fifth normal form.

However if views, stored procedures, Datasets and Data Adapters are used to provide good database independence to application code then a database in third normal form may be fine to start with. At a later stage the database design can be improved upon and because of the built in data independence the changes in the database design will most likely have very little impact on the application code.

From what I understand, the point of OO design is to arrive at a good responsibility driven design where each object is responsible for a clearly defined task and performs only that task. The three main principles are expert doer, high cohesion and low coupling. By making use of well designed interfaces the implementation of an object can be changed without affecting the other objects it interacts with. Also, if there is an error in the system it is easy to trace which object was responsible for the task that resulted in the error.

Basically good OO design results in a system that is easier to test, debug and maintain and one that will respond to changes in the requirements more gracefully. Things like Dependency Injection of DI and Aspect Oriented Programming or AOP can take this idea even further (Used in the Spring application framework).

I try to identify areas in the design that are unclear to me and I use whatever model would help me to understand the problem better.

For the use cases & use case diagram of this system and some example code please see

APPENDIX C

Entity Relationship modeling would normally be used to produce a good database and functional dependencies, join dependencies and multi valued dependencies would be identified and used to normalize the database by decomposing relations into smaller relations but in this case the database is quite simple so I will not give details of the database design process.

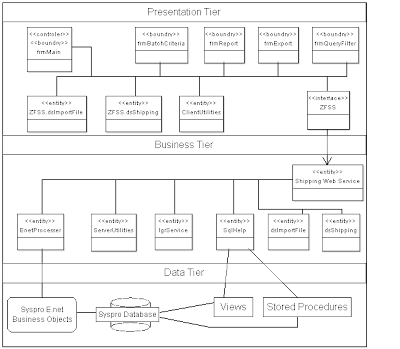

Most of the systems I have worked on since moving from COBOL client-server development to .NET (n-tier) have made use of web services and have been partitioned into 3 layers:

- The data layers which consists of one or more CISAM file data stores (linked to via ODBC), SQL databases, stored procedures, views and functions.

- The business logic layer which consists of web services, related classes and interfaces to integrated systems such as Syspro’s E.net business objects.

- The presentation layer which could be a desktop application, a web front end or a mobile device.

This architecture has worked well in the past for a number of reasons:

- It offers better scalability if the system grows larger in a short space of time in that the processing load can be spread horizontally over more than one server while the interface to the presentation layer remains unchanged.

- It offers automated and remote deployment options.

- It is easier to integrate multiple data sources and to provide a higher level of data independence to application code.

- It is easy to change from local access to the business logic layer, to remote access such as a web interface or mobile device.

- There are less communication problems between client and server and better security because port 80 is usually open on most firewalls.

In some cases using this architecture for very small applications may seem excessive but at most customer sites I have worked at smaller systems usually evolved into more complex ones in time and in these cases the extra effort and complexity paid off.

For the domain model for this system please see APPENDIX D .

Because most of the systems we developed at Cranswick were once off systems and could not be resold to other customers the only opportunities for software reuse were in the form of utilities and components. We were constantly on the lookout for repetition of functionality across systems. I developed a number of utilities and components that could be reused and some of these were used in this system.

One example is the automated deployment service. A runner application is installed on the client machine and is passed command line parameters relating to:

- The program to be run

- The location of the UserSetting.xml file

- The Syspro operator code to identify who is running the program.

The UserSetting.xml file contains:

- The path to the deployment web service

- The username & password for security checks

- Other details about the user.

The runner application will fetch the assembly details from the deployment web service and check the version of the assembly on the client’s machine against the latest version on the server. If the server version is newer than the client version the entire assembly including all of its referenced dll’s etc will be streamed from the server to the client.

Once the latest version exists on the client the runner application will load the assembly, create an instance of it and then run it to start the actual application. The entire process is seamless to the user and all they need to do is click an icon in their Syspro menu.

The automated deployment service was created for a mobile sales order application and was used to deploy applications to mobile phones.

Another example of the use of previously developed components in this system is the simple browse utility. Browsing a list of items such as supplier codes or stock codes etc is a very common operation. With a few lines of code and a simple SQL view a fully functional browse form with filter selections is generated. Please see APPENDIX E for more information.

First Release Prototype

After about 3 or 4 weeks Adrian and I went to ZF to present the first release prototype at a meeting that was attended by the IT manager, 2 of the main system users and an accounts manager that had become interested in the system. I presented the meeting using a projector connected to my laptop and Adrian was on hand to clarify certain points if required.

During the meeting there were a few requirements identified that were not in the original list of detailed requirements. Most of them were fairly simple such as:

- A facility to manually add a line to import rather than importing an XML file.

- The conversion rate used to convert Euro values into Rand values was based on the date of the supplier invoice and the exchange rate for that date. Because Syspro’s internal reports (some standard & some written using Syspro’s report writer) had nowhere in the standard Syspro tables to find the conversion rate used we had to populate one of the user defined fields on a standard Syspro table with the conversion rate used so that it could be displayed on management reports.

Some new requirements were more complex such as:

- The process of receipting purchase orders in Syspro was changed. Purchase order items were now being receipted into inspection and once inspections had passed, they would be receipted from inspection into the actual warehouse. I had originally designed the system to perform a single purchase order receipt directly into the warehouse.

This is a good example of a change in the requirements that would have been unavoidable regardless of how much effort was put into the requirements analysis process. This type of change is quite common when developing in a Syspro environment and is another reason agile methods are well suited.

In this case the change did not have a huge impact on the system code because all required changes were in the web service classes and the interface to the client application remained unchanged. This was a direct result of using the 3 tier architecture and using a good OO design process.

A good change control process is required to manage these types of changes. There is always a debate as to whether or not the functionality was part of the original requirements and cost estimate or constitutes an addition to the original requirements. Good documentation of the decision making process is essential such as who requested the change, when, why and who approved it etc. Vague requirements specifications leave loopholes and the customer can then claim that they were clear as to what they wanted but the analyst did not understand the requirements fully. Making sure that the customer and in fact all stakeholders involved sign off on the requirements specification before development commences helps to avoid these types of problems.

Although I believe that there is a limit to how much time and effort should be allocated to the requirements analysis process, once the list of requirements has been identified they must be documented in a very clear, non ambiguous manner. In some cases it may be necessary to split a single requirement into sub-requirements and specify which of these falls under the scope of current project phase. If this is unclear, more fact finding interviews may be required.

We held many such prototype demo meetings during the course of the project and many new requirements were introduced.

Design Problems

Looking back at the system design and code I can now see many problems as is usually the case when looking at systems you have previously developed after some time. One of the first things I noticed is that the controller form frmMain became quite cluttered. There are too many attributes and methods and this suggests that other objects could have been identified and assigned responsibilities. This is what the high cohesion design principle tries to avoid.

The same thing can be said for the shipping web service. It may have been a good idea to split the web service into 2 or more separate services each offering an interface relating to a more specialized group of functions.

The convenience of double clicking a button and simply adding code can lead to this situation if not monitored. Constant redesign and refactoring of code is required as the system and its requirements evolve.

The use of components and utilities can also help with this as common functionality is removed from the main application code and placed in the components. This allows the application code to focus more on what it needs to do and less on how to do it. (i.e. Controller Object)

Phase 2

The second phase of the project involved automating the process of communication with the Kuehne + Nagel EDI interface. At first Kuehne + Nagel was going to handle the conversion from the ZF file format to their EDI file format and then once they had processed the file they would convert the result back into the ZF file format. This changed for some reason and Cranswick was then required to produce an XML file in the Kuehne + Nagel EDI format. They sent me an example XML file and an XSD schema. I started creating routines to convert the ZF file to the Kuehne + Nagel EDI format by reading a template XML file into a dataset and then working with the dataset in my program code.

At this stage I had to go to Dubai for by brothers wedding and I had to hand over the project to an in house developer at Cranswick. I spent a few days setting up a development environment with him on his machine including all related database, stored procedures, views, test data etc. We also went through the system in detail so that he could understand the requirements and the design.

I made sure that they could contact me via email in case of an emergency but the handover went smoothly and without any problems.

Documentation

Once a system nears completion the user manual and technical installation instructions need to be produced. This is a very time consuming and tedious task for most developers but it is important and should not be neglected. I usually just create a word document or a PDF file but at Cranswick we did start experimenting with a program that records sound and a succession of screenshots to produce a useful image based instructional video with voice instructions and explanations. It is much quicker and easier than typing out a word document and I think could be more easily understood by some users.

For this system I only had time to produce a user manual and because I handed the project over to a developer at Cranswick and the second phase of development was still in full swing he agreed to take on the task on producing the technical installation manual.

<-----End of Report----->

APPENDIX A - Feasibility Document -Please see here.

APPENDIX B - Cranswick Feasibility Document

APPENDIX C - Program design & implementation examples

Some use cases identified

Use case UC-1: Fetch data to export

NOTE: Looking back at this use case I can see a problem. The process of separating the datasets or lists fetched from the web service is being done in the presentation layer. This goes against the design idea of keeping all business logic in the web service. This makes the code difficult to find and as a result makes the system more difficult to maintain. A better option would have been to fetch each list from the web service in turn using a separate web method. On the down side this would mean that each web method that uses the open orders list would need to retrieve the list and this would duplicate efforts and place more load on the database and network. (Unless datasets are cached in the web service)

The use case diagram

NOTE: The Syspro ERP actor participates in the Receipt Purchase Order use case but not in any other use cases such as Fetch Open Orders. This is because Receipt Purchase Order is the only use case that makes use of Syspro’s E.NET business objects. The other use cases will fetch data from the Syspro SQL database directly so these functions are under the control of this system.

The table below shows some of the responsibilities identified that could be handled by various objects.

When a web reference to a web service is added in the client application the Visual Studio .NET IDE automatically generates a proxy class for you that is used as the interface to the web service in the presentation layer. In this case this proxy class is called ZFSS (ZF Shipping Service).

The sequence diagram shown below is for the Export XML use case. There are 2 operations:

- Fetch Export Data for review by the user

- Export XML Files

It is not very clear so I will provide a few details to indicate what is going on. There are 6 classes shown from left to right:

- frmMain: The main controller form of the application

- frmExport: The form controlling the Export functionality

- ProcessExportData: This is a dummy class created because the UML modeling tool I was using (ArgoUML) has a few problems. This class does not actually exist in the application and represents processing that happens within frmExport.

- ZFSS: The ZF Shipping Web Service interface.

- dsOpenOrders: An instance of the dsImportFile dataset class.

- dsExistingOrders: An instance of the dsImportFile dataset class.

The Fetch Export Data operation starts by calling the GetOpenOrders() method of the shipping web service and an instance of the dsImportFile dataset is returned. This dataset is split into 2

separate datasets, one for the K&N open orders and one for the Locton orders. Then the GetExistingOrders() method of the shipping web service is called and another instance of the dsImportFile dataset is returned. The K&N open orders list is combined with the existing orders list to produce the K&N status list. The three lists generated are shown to the user for review. The user can then decide to export the displayed lists out to XML files. That is the

second operation shown in the sequence diagram.

Below the sequence diagram is the domain model diagram. This model would usually display the actors and how they associate with the objects in the domain model but they are not shown in this case.

To help understand the sequence diagram I include a screenshot of the Export XML form

below:

I will now include code showing the process of retrieving the orders. This is a good example of the interaction between the presentation layer, the business layer and the data layer. This is how all the functionality in this application (and most other applications I have developed) is implemented.

Shown below is the code behind the Retrieve Orders button:

private void btnRetrieve_Click(object sender, EventArgs e)

{

//Get open orders and all lines found in the status list

try

{

this.Cursor = Cursors.WaitCursor;

Application.DoEvents();

dsOpenOrders = new dsImportFile();

dsLoctonOrders = new dsImportFile();

ZFSS.Service ZFSS_WS = new ZFKN_ImportQuery.ZFSS.Service();

ZFSS_WS.Url = URL;

int INDEX = cbStartDate.SelectedIndex;

DateTime FromDate = Convert.ToDateTime(cbStartDate.SelectedItem);

cbStartDate.SelectedIndex = cbStartDate.SelectedIndex +

Convert.ToInt32(txtWeeks.Text);

DateTime ToDate = Convert.ToDateTime(cbStartDate.SelectedItem);

cbStartDate.SelectedIndex = INDEX;

//FOR TESTING ONLY

FromDate = Convert.ToDateTime("2005/05/14");

ToDate = Convert.ToDateTime("2007/01/01");

//FOR TESTING ONLY

DataSet DS = ZFSS_WS.GetOpenOrders(FromDate, ToDate);

//This dataset will contain all suppliers so it must be

//split between K&N and LOCTON

DataTable DT_LOCTON = new DataTable();

DataTable DT_KN = new DataTable();

DT_LOCTON.Merge(DS.Tables["Table"]);

DT_KN.Merge(DS.Tables["Table"]);

//NOTE: The query returning the rows of this table

//used a left outer join against the Locton Supplier List

//table so if it is not a Locton supplier the value will be null

foreach (DataRow LR in DT_LOCTON.Rows)

{

if(LR.IsNull("LoctonSupplier"))

{

LR.Delete();

}

}

//foreach (DataRow KNR in DT_KN.Rows)

//{

// if (!KNR.IsNull("LoctonSupplier"))

// {

// KNR.Delete();

// }

//}

//Get rid of the LoctonSupplier columns

DT_LOCTON.Columns.Remove("LoctonSupplier");

DT_KN.Columns.Remove("LoctonSupplier");

dsOpenOrders.ImportFile.Merge(DT_KN);

dgvOpenOrders.DataSource = dsOpenOrders.ImportFile;

dsLoctonOrders.ImportFile.Merge(DT_LOCTON);

dgvLoctonOrders.DataSource = dsLoctonOrders.ImportFile;

dsExistingOrders = new dsImportFile();

ZFSS.dsShipping dsSP = ZFSS_WS.GetExistingOrders();

ZFSS.dsShipping.ZF_ImportDataTable TABLE = new

ZFKN_ImportQuery.ZFSS.dsShipping.ZF_ImportDataTable();

TABLE.Merge(dsSP.ZF_Import);

TABLE.Columns.Remove("Status");

TABLE.Columns.Remove("PartValue");

TABLE.Columns.Remove("Bin");

TABLE.Columns.Remove("Receipted");

TABLE.Columns.Remove("Operator");

TABLE.Columns.Remove("Record_ID");

TABLE.Columns.Remove("LineStatus");

TABLE.Columns.Remove("LineType");

TABLE.Columns.Remove("ImportFileDate");

dsExistingOrders.ImportFile.Merge(TABLE);

dsEXPORT = new dsImportFile();

//To resolve duplicates where the line is still an open

//order in Syspro but it has already been imported into the system

//delete all lines from dsOpenOrders.ImportFile that are also

//in dsExistingOrders.ImportFile based on P/O and Line No

dsEXPORT.ImportFile.Merge(dsOpenOrders.ImportFile);

foreach (dsImportFile.ImportFileRow R in

dsExistingOrders.ImportFile.Rows)

{

string FILTER = "POnumber = '" + R.POnumber + "' and POline =

" +

R.POline;

dsImportFile.ImportFileRow[] to_delete =

(dsImportFile.ImportFileRow[])dsEXPORT.ImportFile.Select(FILTER);

foreach (dsImportFile.ImportFileRow r in to_delete)

{

r.Delete();

}

}

dsEXPORT.ImportFile.AcceptChanges();

//Now ONLY the first weeks open orders must be merged

//into the existing orders list so delete any row from

//dsOpenOrders.ImportFile that do not fall in the first week

//So add one week to the from date

DateTime CheckDate = FromDate.AddDays(8);

foreach (dsImportFile.ImportFileRow R in dsEXPORT.ImportFile.Rows)

{

if (R.ExWorksDate >= CheckDate)

{

R.Delete();

}

}

dsEXPORT.ImportFile.AcceptChanges();

dsEXPORT.ImportFile.Merge(dsExistingOrders.ImportFile);

dgvExistingOrders.DataSource = dsEXPORT.ImportFile;

dgvExistingOrders.AutoResizeColumns(

DataGridViewAutoSizeColumnsMode.AllCells);

this.Cursor = Cursors.Default;

}

catch (Exception exc)

{

this.Cursor = Cursors.Default;

string MES = "The Export Function failed: " + exc.Message;

string TITLE = "Export XML File Error";

MessageBox.Show(MES, TITLE, MessageBoxButtons.OK,

MessageBoxIcon.Error);

}

}

First an instance of the web service interface, ZFKN_ImportQuery.ZFSS.Service() is created. Note that the URL of the web service is set at run time. It is obtained from the UserSetting.XML file that is maintained by the user settings component in the utilities.dll. This makes the application easier to configure and support. The ZFSS_WS.GetOpenOrders(FromDate, ToDate) web method is then executed and it returns a Dataset object. The dataset returned contains both normal orders and LOCTON orders so it is split into two separate lists. The lists are then bound to controls so that the user can review the information.

Next the ZFSS_WS.GetExistingOrders() is executed and the dataset returned is processed. The existing orders list is then bound to a control and the user is then given the opportunity to review the three lists generated and can decide to export the lists to XML.

The GetOpenOrders() web method is shown below:

[WebMethod(MessageName = "GetOpenOrders", Description = "This method returns all

Open Purchase Orders." "It returns null indicating failure.")]

public DataSet GetOpenOrders(DateTime FromDate, DateTime ToDate)

{

try

{

string sCONN = GetConnectionString();

DataSet dsRET = null;

object[] sp_params = new object[2];

sp_params[0] = FromDate;

sp_params[1] = ToDate;

dsRET = SqlHelp.DAL.SqlHelper.ExecuteDataset(sCONN, "ZF_GetOpenOrders",

sp_params);

//If the dataset is null then throw an error

if (dsRET == null)

{

throw new Exception("ZF_GetOpenOrders result DataSet was returned

empty.");

}

return dsRET;

}

catch (Exception exc)

{

Service_EventLog.WriteEntry("Error in GetOpenOrders(): " + exc.Message,

System.Diagnostics.EventLogEntryType.Error);

return null;

}

}

The method returns NULL to indicate failure and writes a log message to the web service event log if an error occurs. The method returns a dataset object that will be merged into an instance of the dsImportFile dataset in the calling procedure. The SqlHelper class hides the code that executes the stored procedure and populates a dataset from the result set returned by the stored procedure.

The "ZF_GetOpenOrders" stored procedure is shown below:

CREATE PROCEDURE ZF_GetOpenOrders

(

@FromDate datetime,

@ToDate datetime

)

AS

SELECT

SysproOutdoors.dbo.ApSupplier.SupplierName,

SysproOutdoors.dbo.PorMasterDetail.MStockCode AS PartNumber,

CAST(ROUND(SysproOutdoors.dbo.PorMasterDetail.MOrderQty, 0) AS int) AS OrderQty,

SysproOutdoors.dbo.PorMasterDetail.MLatestDueDate AS ExWorksDate,

SysproOutdoors.dbo.PorMasterDetail.PurchaseOrder AS POnumber,

CAST(ROUND(SysproOutdoors.dbo.PorMasterDetail.Line, 0) AS int) AS POline,

SysproOutdoors.dbo.PorMasterHdr.Supplier AS SupplierCode,

ZF_LoctonSuppliers.SupplierCode AS LoctonSupplier

FROM

SysproOutdoors.dbo.PorMasterDetail

INNER JOIN

SysproOutdoors.dbo.PorMasterHdr ON

SysproOutdoors.dbo.PorMasterDetail.PurchaseOrder =

SysproOutdoors.dbo.PorMasterHdr.PurchaseOrder

INNER JOIN SysproOutdoors.dbo.ApSupplier ON

SysproOutdoors.dbo.PorMasterHdr.Supplier = SysproOutdoors.dbo.ApSupplier.Supplier

LEFT OUTER JOIN ZF_LoctonSuppliers ON

SysproOutdoors.dbo.ApSupplier.Supplier = ZF_LoctonSuppliers.SupplierCode

WHERE

(SysproOutdoors.dbo.PorMasterDetail.MLatestDueDate >= @FromDate)

AND (SysproOutdoors.dbo.PorMasterDetail.MLatestDueDate < @ToDate) AND (SysproOutdoors.dbo.ApSupplier.Currency <> '$')

RETURN

Note that a left outer join is used on the LOCTON suppliers table so that if no LOCTON supplier is available NULL will be returned. This fact is used in the logic that separates the returned dataset into normal suppliers and LOCTON suppliers. This stored procedure is located in the applications database called ZF_CRANSWICK. The Syspro ERP database is referenced by means of the SysproOutdoors.dbo prefix. I have seen developers place their stored procedure directly in Syspro’s database but this is a bad practice as it clutters up the Syspro database and it makes the system more difficult to maintain. If all related stored procedures and functions for an application are contained within its own database the application is easier to maintain and support.

This code is not ideal because there is still too much processing performed in the presentation layer.

Export XML Sequence Diagram

APPENDIX D - The Domain Model

Domain Model

NOTE: In most cases the applications database would be created on the same SQL server as the Syspro database. In the domain model only the Syspro database is shown but the application would have its own database.

APPENDIX E - Simple Browse Utility

An example of the simple browse form is shown below:

The filters use a LIKE selection on the Code and Name strings to make finding records easier. For instance if “Hardware” was typed into the name filter only suppliers that contain the phrase “Hardware” in their name would be displayed. Because a view is used as the source of the data it could filter suppliers by region or range of parts they supply etc to reduce the size of the returned dataset. Data from multiple tables can be joined to create the dataset. This can

be very versatile.

The SQL statements in the vw_ApSupplier view used as the source for the form data is shown

below:

SELECT Supplier, SupplierName

FROM SysproOutdoors.dbo.ApSupplier

The client side code used to invoke the browse form is shown below:

private void btnBrowseSupplier_Click(object sender,

EventArgs e)

{

try

{

Utilities.frmSimpleBrowse SB = new Utilities.frmSimpleBrowse();

string CODE_NAME = "Supplier";

string DESC_NAME = "SupplierName";

string CODE_TXT = "Supplier Code";

string DESC_TXT = "Supplier Name";

string SOURCE_OBJECT_NAME = "vw_ApSupplier";

string FORM_HEADING_TEXT = "Supplier Browse";

SB.CODE_NAME = CODE_NAME;

SB.CODE_TXT = CODE_TXT;

SB.DESC_NAME = DESC_NAME;

SB.DESC_TXT = DESC_TXT;

SB.SOURCE_OBJECT_NAME = SOURCE_OBJECT_NAME;

SB.FORM_HEADING_TEXT = FORM_HEADING_TEXT;

//Now get the data

ZFSS.Service ZFSS_WS = new ZFKN_ImportQuery.ZFSS.Service();

ZFSS.dsSimpleBrowseData.BrowseDataDataTable SBD =

ZFSS_WS.GetSimpleBrowseData(CODE_NAME, DESC_NAME,

SOURCE_OBJECT_NAME);

SB.SIMPLE_BROWSE_DATA = new

Utilities.dsSimpleBrowseData.BrowseDataDataTable();

SB.SIMPLE_BROWSE_DATA.Merge(SBD);

if (SB.ShowDialog() == DialogResult.OK)

{

txtSupplierCode.Text = SB.RETURN_CODE;

txtSupplierName.Text = SB.RETURN_DESC;

}

}

catch (Exception exc)

{

string MES = "The following error while browsing for supplier: " +

exc.Message;

string TITLE = "Supplier Browse Error";

MessageBox.Show(MES, TITLE, MessageBoxButtons.OK,

MessageBoxIcon.Error);

}

}

The code simply executes the GetSimpleBrowseData()web method to obtain a simple browse dataset dsSimpleBrowseData that contains the view data. It then creates an instance of the simple browse form, sets a few parameters and passes it the dataset containing the browse data and then shows the form.

The web method code is shown below:

[WebMethod(MessageName = "GetSimpleBrowseData",

Description = "This method returns the data required for a simple browse."

+ "It returns null indicating failure.")]

public Utilities.dsSimpleBrowseData.BrowseDataDataTable GetSimpleBrowseData(string

CODE_NAME, string DESC_NAME, string SOURCE_OBJECT_NAME)

{

try

{

Utilities.Utility U = new Utilities.Utility();

string sCONN = GetConnectionString();

return U.GetSimpleBrowseData(sCONN, CODE_NAME, DESC_NAME,

SOURCE_OBJECT_NAME);

}

catch (Exception exc)

{

Service_EventLog.WriteEntry("Error in GetSimpleBrowseData(): " +

exc.Message, System.Diagnostics.EventLogEntryType.Error);

return null;

}

}

The web method is currently in the shipping web service but could easily be moved into a utilities web service.

To use this component each application will need a reference to the Utilities.dll and the web service that contains this web method.

No comments:

Post a Comment